As we all adopt LLMs, we should keep our LLM integration design strategy simple so we get the most out of it across service operations (AIOps, monitoring and correlation, service bots, and overall SRE strategy), DevOps (code and artifact generation), quality assurance (test strategy and script generation), and platform engineering in general (provisioning, configuration, and script updates). There are certainly more use cases; these are just the low-hanging fruit.

In upcoming posts, I would like to discuss in more detail how integration strategies for ChatGPT 3.5, Llama, Anthropic, and AWS are being put to work.

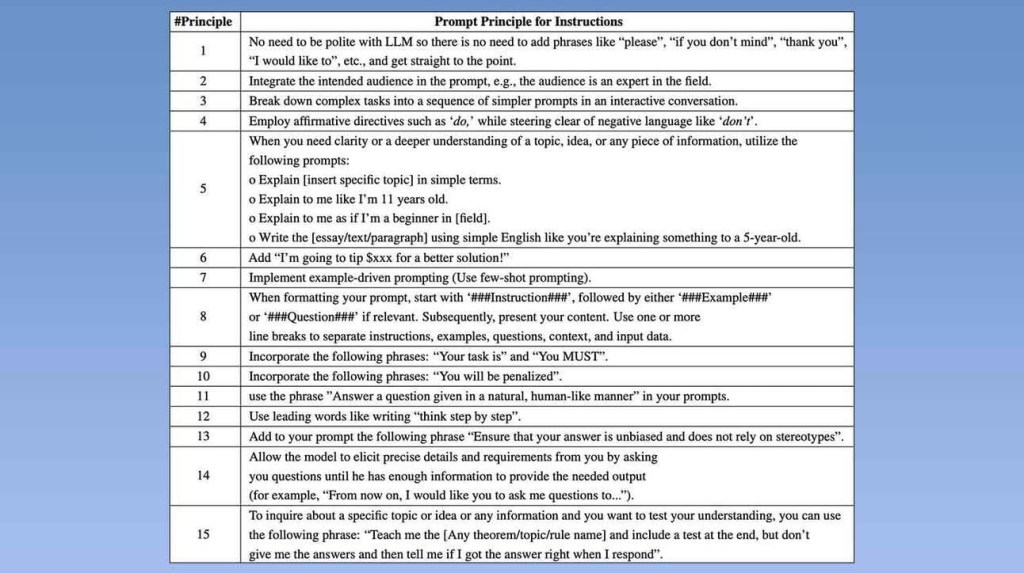

Image source: VILA Lab, published by RunDown AI
